Not really sure if this was mentioned anywhere, but I thought it might be useful for anyone who wants to make use of vector math, specifically calculating the length of a 2D vector, while minimizing the risk of overflow.

Because sqrt(32767) ≈ 181, any component bigger than about 181 overflows PICO-8's 16.16 fixed-point numbers when squared, so it is easy to overflow when using the textbook equation for vector length:

```lua
len = sqrt(x*x + y*y)
```
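To see the failure concretely, here is a rough sketch that emulates PICO-8's 16.16 numbers with Lua 5.3+ integers (raw value = number × 65536, wrapped to signed 32 bits). The helper names are hypothetical, and the assumption that a multiply floors the 32.32 product before wrapping may not match PICO-8's exact rounding; only the overflow behavior matters here.

```lua
-- Rough 16.16 emulation (Lua 5.3+): a value v is stored as the signed
-- 32-bit raw integer v * 65536. Assumption: multiply floors the 32.32
-- product back to 16.16, then wraps on overflow like a 32-bit register.
local function wrap32(raw)
  raw = raw & 0xffffffff                 -- keep the low 32 bits
  if raw >= 0x80000000 then              -- reinterpret them as signed
    raw = raw - 0x100000000
  end
  return raw
end

local function fx(v) return wrap32(math.floor(v * 65536)) end  -- number -> raw
local function unfx(raw) return raw / 65536 end                 -- raw -> number
local function fmul(a, b) return wrap32((a * b) // 65536) end   -- raw * raw
local function fadd(a, b) return wrap32(a + b) end              -- raw + raw

-- the textbook x*x + y*y, evaluated in emulated 16.16
function naive_sq_sum(x, y)
  local rx, ry = fx(x), fx(y)
  return unfx(fadd(fmul(rx, rx), fmul(ry, ry)))
end

print(naive_sq_sum(181, 0))  -- 32761.0: still fits, just
print(naive_sq_sum(182, 0))  -- -32412.0: 33124 wrapped past 32767
```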

To reduce the risk of overflow when squaring the terms, you can first scale down the vector's x and y components by the larger magnitude:

```lua
m = max(abs(x), abs(y))
x = x / m
y = y / m
```

then scale the square root of the sum of their squares back up by m:

```lua
len = sqrt(x*x + y*y) * m
```
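Written out, the identity behind the trick (for $m > 0$; a zero vector needs no scaling) is:

$$
\sqrt{x^2 + y^2} \;=\; m\sqrt{\left(\frac{x}{m}\right)^2 + \left(\frac{y}{m}\right)^2} \;=\; m\sqrt{n^2 + 1},
\qquad m = \max(|x|, |y|),\quad n = \frac{\min(|x|, |y|)}{m}
$$

Both scaled terms lie in $[-1, 1]$, so the sum under the root is at most 2. Only the final multiply by $m$ can still overflow, and only when the true length itself exceeds PICO-8's range.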

Lua code (simplified, since after scaling one of the two terms will always be 1):

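```lua
function length(v)
  local d = max(abs(v[1]), abs(v[2]))      -- larger component magnitude
  local n = min(abs(v[1]), abs(v[2])) / d  -- smaller one, scaled into [0, 1]
  return sqrt(n*n + 1) * d                 -- the larger scaled term is exactly 1
end
```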

This is great! I just ran into overflow for the first time yesterday when I tried to calculate the distance between two objects on opposite sides of the map. Since I really just wanted to know if they were "near" each other, not the precise distance, I ended up using a fake measure of "distance" that's just abs(a.x-b.x)+abs(a.y-b.y), but this is a much better general solution. Thanks!

To simplify this further, you can right-shift everything by a fixed amount instead of calculating precise scale factors. A flat 'divide by 256' allows the full -32768 to 32767 range, and you can 'cheat' if you're dealing mostly with integers in the first place:

```lua
len = sqrt(x * 0x0.0001 * x + y * 0x0.0001 * y) * 0x100
```

Of course, if you need full precision and don't mind giving up a few tokens, then this is more accurate:

```lua
nx = x * 0x0.01
ny = y * 0x0.01
len = sqrt(nx * nx + ny * ny) * 0x100
```

The reason for using powers of 2 (i.e. bit-shifts) instead of arbitrary multipliers is that this avoids the precision errors that the 16:16 format would otherwise inflict VERY quickly on math you likely need some precision on if you're bothering to do it in the first place. :)
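A rough illustration of that precision point, using the same kind of hypothetical 16.16 emulation (Lua 5.3+, raw integer = value × 65536; flooring on multiply is an assumption). Since 1/256 is exactly representable, scaling down and back up by 256 round-trips exactly, while 1/100 truncates to 655/65536 and the error grows with the value being scaled.

```lua
-- Hypothetical 16.16 emulation (Lua 5.3+): raw integer = value * 65536.
local function wrap32(raw)
  raw = raw & 0xffffffff
  if raw >= 0x80000000 then raw = raw - 0x100000000 end
  return raw
end
local function fx(v) return wrap32(math.floor(v * 65536)) end  -- number -> raw
local function fmul(a, b) return wrap32((a * b) // 65536) end   -- raw * raw

-- scale x down by multiplying with fx(1/k), then back up by k, all in 16.16
function roundtrip(x, k)
  local down = fmul(fx(x), fx(1 / k))
  return fmul(down, fx(k)) / 65536
end

print(roundtrip(1, 256))     -- 1.0: 1/256 is 0x0.01, stored exactly
print(roundtrip(1000, 256))  -- 1000.0
print(roundtrip(1000, 100))  -- about 999.45: 1/100 truncated to 655/65536
```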

I'm not so sure about doing a flat divide. The original solution can actually shift left if the largest component is fractional. That's useful if you actually need to calculate the lengths of rather small vectors. It moves both very large numbers away from overflow values, and very small numbers away from underflow values. Anything less than 1/256 will square to 0 in 16.16 format, after all.

In my own code I actually test whether the largest component is >= 256 and, if so, take a path that shifts down by 8; if it's <= 1/256, I take a path that shifts up by 8; otherwise I take the obvious path. But honestly, I think the OP's solution might be better. Also faster.
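A minimal sketch of that three-path idea in plain Lua (5.1+). The name len_3path is made up, and ordinary floating point does not reproduce 16.16 overflow or underflow, so this only shows the control flow.

```lua
local abs, max, sqrt = math.abs, math.max, math.sqrt

function len_3path(x, y)
  local m = max(abs(x), abs(y))
  if m >= 256 then
    x, y = x / 256, y / 256             -- large: shift down by 8 bits...
    return sqrt(x * x + y * y) * 256    -- ...and back up
  elseif m <= 1 / 256 then
    x, y = x * 256, y * 256             -- tiny: shift up by 8 bits so squares don't vanish...
    return sqrt(x * x + y * y) / 256    -- ...and back down
  end
  return sqrt(x * x + y * y)            -- otherwise: the obvious path
end
```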

By the way, on a related note, if you're dividing vectors by their lengths to get unit vectors, there's a handy trick to avoid dividing by zero without testing for zero. This doesn't matter so much on PICO-8, which won't crash your game if you divide by zero, but since we're talking useful tricks, here's how you do it on a real computer:

```cpp
#include <cfloat> // FLT_MIN

V2 V2::Normalized()
{
    float len = Length();
    len += FLT_MIN; // this is the magic
    return V2(x/len, y/len);
}
```

FLT_MIN is the smallest positive normalized value a float can hold (denormals can go lower). Adding it doesn't make a meaningful difference to any unremarkable vector, but a zero-length vector now divides 0 by FLT_MIN and comes out as (0, 0) instead of NaN.

You could do the same thing in PICO-8 Lua by adding 0x0.0001, though it's really not necessary. Dividing by zero on PICO-8 just silently returns the maximum representable value, if I recall correctly. There's no reasonable value for /0 anyway, so that's fine. This is just to avoid crashes.
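The epsilon trick sketched in plain Lua (5.1+). The name normalize is made up, and 1/65536 stands in for 0x0.0001, PICO-8's smallest positive step. Because that epsilon is not negligible for short vectors, results come out very slightly short of unit length.

```lua
local EPS = 1 / 65536   -- stands in for PICO-8's 0x0.0001

function normalize(x, y)
  local len = math.sqrt(x * x + y * y) + EPS  -- never exactly zero
  return x / len, y / len                     -- a zero vector comes out as (0, 0)
end
```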

Well this discussion is specifically about handling LARGE values that would overflow. If that's your concern, AKA integer distances such as most PICO-8 games would be likely to encounter, then the flat 'shift right by 8, then left when done' is a near-bit-perfect scaling factor.

32767 ^ 2 + 32767 ^ 2 = 2147352578 (the largest squared distance you could ever try to compute on PICO-8)

(32767 / 256) ^ 2 + (32767 / 256) ^ 2 = 32766.000030517578125 = 0x7ffe.0002 exactly.
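Those numbers can be checked with plain Lua 5.3+ integers by working directly on raw 16.16 values (value × 65536). The function name is made up; the squaring step drops 16 fraction bits the way a 16.16 multiply does, which here is exact.

```lua
-- raw 16.16 sum of squares for the vector (x, x) after scaling x down by 256
function scaled_sum_raw(x)
  local r = x * 256               -- raw encoding of x / 256 (= x * 65536 / 256)
  local sq = (r * r) // 65536     -- 16.16 multiply: drop the extra 16 fraction bits
  return sq * 2                   -- add the two equal squares
end

local raw = scaled_sum_raw(32767)
print(2 * 32767 * 32767)          -- 2147352578: the unscaled sum, far beyond 32767
print(string.format("0x%x.%04x", raw >> 16, raw & 0xffff))  -- 0x7ffe.0002
print(raw <= 0x7fffffff)          -- true: fits below PICO-8's maximum
```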

There are few, if any, situations where both large integer distances AND sub-pixel distances matter. For sub-pixel distances you can indeed do the reverse, but the precision gained is almost always lost immediately when shifting back, due to the 16:16 precision limit.

I was thinking in terms of normalizing vectors. When you normalize a vector, you're quite often dealing with something with components well under 1.0.

Since I'd use the same code for normalizing large and small vectors (token limits and all), I'd want the neat solution the OP posted, which handles both large and small for quite little cost, as long as you simplify the given code by extracting a couple of common subexpressions.

Ah fair enough. For me it's mostly about keeping to bit-exact shifts, as otherwise you're likely to lose random and unpredictable precision from using arbitrary multipliers and divisors, and with only 16:16 precision to work with that'll stack up suddenly and sharply.

Edit: v1.1 - I forgot to abs() the choices before choosing a max dimension. Now fixed.

In case anyone's interested, this fairly-naive implementation is the smallest I was able to make the 3D version of the overflow-safe function:

```lua
function v3len(v)
  -- components are stored at v[0], v[1], v[2]
  local d=max(max(abs(v[0]),abs(v[1])),abs(v[2]))  -- largest magnitude
  local x,y,z=v[0]/d,v[1]/d,v[2]/d                 -- scale into [-1, 1]
  return sqrt(x*x+y*y+z*z)*d
end
```

I tried doing a partial bubble sort to separate the largest value from the other two and thus spare myself a divide and square the way the 2D version above does, but the best I managed with that approach was 2 tokens larger than this naive version and, due to math on PICO-8 being very fast, probably slower as well.

Related performance trick: x^0.5 is significantly faster than sqrt(x)!

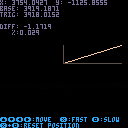

I’ll be trying this improved version on my old Star Wars game (large reality bubble = overflow!)

@Felice: your version is wrong for negative numbers! max(-864,5) => 5 (should be 864 for our case)

Oops, you're right. I forgot the abs()es. I've fixed that above. Thanks!

Random follow-up thinking...

I think if one is going to do 3D math on PICO-8, and is not planning to very carefully make sure no two interactions are more than a mathematically-safe distance apart each time they're calculated, it's probably safest to arrange for all of the world coords (live ones, at least) to be inside a sphere of maximum radius 16383, maybe a little less. That ensures you'll never have a distance or delta vector component that exceeds PICO-8's numeric limits.

Of course, bounding by a sphere can have its own issues, at least if it's a dynamic sub-sphere of a larger world, since sphere tests themselves are likely to test PICO-8's numeric limits.

If you prefer to limit to a bounding box, you'd need to limit x,y,z to ±9458 for 3D stuff, or x,y to ±11584 for 2D stuff.
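A quick way to reproduce those limits in plain Lua (5.1+); the function names are illustrative. The assumption is that every distance, and every delta component, between two live points must stay at or below 32767. For a 3D box the formula gives 9459, so the 9458 above leaves one unit of slack; 2D matches at 11584.

```lua
local LIMIT = 32767   -- largest whole number PICO-8 can hold

-- sphere of radius r: the two farthest points are 2r apart
function sphere_radius() return math.floor(LIMIT / 2) end

-- box [-a, a]^n: the two farthest (opposite) corners are 2a*sqrt(n) apart
function box_half_extent(n) return math.floor(LIMIT / (2 * math.sqrt(n))) end

print(sphere_radius())     -- 16383
print(box_half_extent(2))  -- 11584
print(box_half_extent(3))  -- 9459
```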

So basically you have to sacrifice a little over one bit of range for the sphere, and between one and a half (2D) and almost two (3D) bits for a bounding box.

Side note: There will also be gotchas with using cross products, since the length of the output vector equals the area of the parallelogram the two input vectors describe. Area is not linear vs. the sides, but quadratic, so it too will burst the PICO-8 number seams if not watched carefully.

Thus, when computing a triangle normal, one would be best off normalizing the two edge vectors before computing the cross product (and then normalizing the result, since the cross product of two unit vectors has length sin θ, not 1). This might catch someone out if they're accustomed to normalizing only after the cross product, which is usually the optimal way to do it.

Really, in general, you want to be working with unit vectors when doing dot products and cross products, I'd think.
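A plain-Lua sketch (5.1+) of that order of operations: normalize both edges, take the cross product, then normalize the result. Vectors here are {x, y, z} tables indexed from 1 (unlike the v[0]-based v3len above), and v3sub, v3cross, v3norm and tri_normal are illustrative names. Floats don't overflow like 16.16, so this shows the ordering rather than the failure.

```lua
local sqrt = math.sqrt

local function v3sub(a, b) return {a[1]-b[1], a[2]-b[2], a[3]-b[3]} end

local function v3cross(a, b)
  return {a[2]*b[3] - a[3]*b[2],
          a[3]*b[1] - a[1]*b[3],
          a[1]*b[2] - a[2]*b[1]}
end

-- overflow-aware normalize: scale by the largest component before squaring
local function v3norm(v)
  local d = math.max(math.abs(v[1]), math.abs(v[2]), math.abs(v[3]))
  if d == 0 then return {0, 0, 0} end     -- degenerate vector
  local x, y, z = v[1]/d, v[2]/d, v[3]/d
  local len = sqrt(x*x + y*y + z*z)
  return {x/len, y/len, z/len}
end

function tri_normal(p0, p1, p2)
  local e1 = v3norm(v3sub(p1, p0))        -- unit edges keep the cross product small
  local e2 = v3norm(v3sub(p2, p0))
  return v3norm(v3cross(e1, e2))          -- |e1 x e2| = sin(angle), so renormalize
end
```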

sqrt is expensive, even when written as an exponent (that used to be faster but now it is slightly worse), but trig is cheap. A faster algorithm, with some wild rounding errors around the edges:

```lua
function len2d_fast(dx,dy)
  local s = sin(atan2(dx,dy))
  if (s == 0) return abs(dx)
  return abs(dy/s)
end
```

Okay, I've benchmarked it using @pancelor's profiler at https://www.lexaloffle.com/bbs/?tid=46117 and then sloppily written my own cart to compare the results. The algorithms give very similar results almost everywhere, usually identical, but angles very close to (but not exactly at) a multiple of 0.25 turns can show very large differences. Those are the cases where one of dx or dy is nearly zero relative to the other. Usually, neither algorithm is remotely accurate in these cases.

The original algorithm in the first comment in this thread takes 67 cycles. An optimized variant skips the redundant abs calls and the max/min calls, taking 54 cycles in the worst case (when abs(dx) > abs(dy)):

```lua
function len2d(dx, dy)
  local d,n=abs(dx),abs(dy)
  if (d<n) d,n=n,d
  n/=d
  return sqrt(n*n + 1) * d
end
```

You can shave two cycles off the worst case (bringing it level with the best case) by replacing the "swap so d is larger" logic with two copies of the rest of the function, with d and n swapped, as the bodies of an if...else. This avoids the extra assignment in the "swap" case. It seems like a bad time-for-tokens tradeoff in most cases, though: if you're pressed for time, you'd prefer the 18-cycle len2d_fast, which also uses fewer tokens.
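A plain-Lua sketch (5.1+) of that if...else layout; len2d_noswap is a made-up name. The zero check is an addition so that a zero vector returns 0 instead of NaN in floating point; on PICO-8 it would cost tokens and cycles not counted in the measurements above.

```lua
local abs, sqrt = math.abs, math.sqrt

function len2d_noswap(dx, dy)
  local a, b = abs(dx), abs(dy)
  if a >= b then
    if a == 0 then return 0 end   -- both components zero (added check)
    local n = b / a               -- smaller over larger, in [0, 1]
    return sqrt(n * n + 1) * a
  else
    local n = a / b               -- same body with the roles swapped
    return sqrt(n * n + 1) * b
  end
end
```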

My algorithm comes from observing that Pico-8's trig functions are fast and square roots are slow (not as slow as they used to be, since sqrt is now notably faster than x^0.5, but the 28 system cycles still sting). Therefore, it's faster to take a "round trip" through trigonometry!

- Calculate the angle `a` of the vector using `atan2(dx, dy)`.
- The unit vector for an angle `a` is `(cos(a), sin(a))`, but we'll only need one component. I arbitrarily chose the Y-component, so I took `sin(a)`, naming it `s`.
- If `s == 0`, the vector is horizontal, so only the X component exists. Avoid dividing by zero by returning the magnitude of just the X component (using `abs(dx)`) in this special case.
- Otherwise, return the magnitude of the ratio of the Y-component of the original vector and `s` (`abs(dy/s)`).
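The steps above, sketched in plain Lua. p8_atan2 and p8_sin are hypothetical shims mimicking PICO-8's conventions (angles in turns, y flipped for screen space), and floats don't reproduce 16.16 rounding. One deviation: the sketch tests `dy == 0` rather than `s == 0`, because in floating point the sine of half a turn is about 1e-16, not exactly 0.

```lua
local TAU = 2 * math.pi
local atan_yx = math.atan2 or math.atan   -- two-argument atan on Lua 5.1 through 5.4

local function p8_atan2(dx, dy) return (atan_yx(-dy, dx) / TAU) % 1 end
local function p8_sin(a) return -math.sin(a * TAU) end

function len2d_fast(dx, dy)
  -- steps 1 and 2: angle of the vector, then the Y component of its unit vector
  local s = p8_sin(p8_atan2(dx, dy))      -- equals dy / length
  -- step 3: horizontal vector, so the length is just |dx|
  if dy == 0 then return math.abs(dx) end
  -- step 4: |dy / s| = |dy| / (|dy| / length) = length
  return math.abs(dy / s)
end
```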

This works because:

- If two vectors are parallel, the ratio between any pair of corresponding nonzero components is equal to the ratio of any other such pair.
- The magnitude of that common ratio is equal to the ratio of the magnitudes of the entire vectors.
- We specifically constructed `(cos(a), sin(a))` as a unit vector parallel to the input vector, so we can use the ratio of just `sin(a)` and `dy` (the Y-components) to get the ratio of the vectors' magnitudes.
- The unit vector has magnitude 1. `x/1 == x` for all real values of `x`, so the ratio between the magnitude of a vector and the magnitude of the unit vector is just the magnitude of the original vector.
- Therefore, the magnitude ratio calculated via Y components only is equal to the magnitude of the entire input vector!
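In symbols, writing the input as its length times its unit direction (PICO-8's screen-space flips of `sin` and `atan2` cancel, so the usual convention applies):

$$
(dx,\, dy) = \lVert v \rVert\,(\cos a,\, \sin a)
\;\Longrightarrow\; dy = \lVert v \rVert \sin a
\;\Longrightarrow\; \lVert v \rVert = \left|\frac{dy}{\sin a}\right| \qquad (\sin a \neq 0)
$$

When $\sin a = 0$ the vector lies along the X axis and $\lVert v \rVert = |dx|$.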

atan2 converts (dx, dy) to an angle; cos and sin convert an angle back to the X and Y components of the unit vector, respectively. That's most of the trig I know off the top of my head, but it's enough to optimize a lot of useful things in Pico-8...

I could trim off the most egregious rounding errors by rounding s to 0 when it is very near to zero, using dx as a better approximation when limits of precision will start doing very bad things to the dy/s calculation.
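One possible version of that tweak, in plain Lua with the same kind of hypothetical turn-based shims standing in for PICO-8's atan2/sin (float rounding, not 16.16). The threshold S_MIN = 1/64 is an arbitrary illustrative choice: below it the vector is within about 0.9 degrees of horizontal, and |dx| underestimates the true length by at most about 0.012%.

```lua
local TAU = 2 * math.pi
local atan_yx = math.atan2 or math.atan   -- two-argument atan on Lua 5.1 through 5.4

local function p8_atan2(dx, dy) return (atan_yx(-dy, dx) / TAU) % 1 end
local function p8_sin(a) return -math.sin(a * TAU) end

local S_MIN = 1 / 64   -- hypothetical "close enough to zero" threshold

function len2d_fast_clamped(dx, dy)
  local s = p8_sin(p8_atan2(dx, dy))
  if math.abs(s) < S_MIN then return math.abs(dx) end  -- nearly horizontal
  return math.abs(dy / s)
end
```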

nice! low tokens, fast, and the numerical issues aren't too horrible. check out this other thread about distance functions; yours seems very similar to drakeblue's: https://www.lexaloffle.com/bbs/?pid=119363#p (I linked to my summary post in the middle of the thread, but the whole thing is good)

fwiw, this function in particular seems to do well in the nearly-a-multiple-of-0.25 cases:

```lua
function dist_trig(dx,dy)
  local ang=atan2(dx,dy)
  return dx*cos(ang)+dy*sin(ang)
end
```

(which makes sense, because there's no division involved)
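Why the projection works: (cos(ang), sin(ang)) is the unit vector pointing along (dx, dy), so their dot product is the vector's length. A plain-Lua sketch with hypothetical shims for PICO-8's turn-based, screen-space atan2/cos/sin (floats, not 16.16):

```lua
local TAU = 2 * math.pi
local atan_yx = math.atan2 or math.atan   -- two-argument atan on Lua 5.1 through 5.4

local function p8_atan2(dx, dy) return (atan_yx(-dy, dx) / TAU) % 1 end
local function p8_cos(a) return math.cos(a * TAU) end
local function p8_sin(a) return -math.sin(a * TAU) end

function dist_trig(dx, dy)
  local ang = p8_atan2(dx, dy)
  -- (p8_cos(ang), p8_sin(ang)) = (dx, dy) / length, so this dot product is
  -- dx*dx/length + dy*dy/length = length, with no division anywhere
  return dx * p8_cos(ang) + dy * p8_sin(ang)
end
```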

It's my go-to distance function, although https://www.lexaloffle.com/bbs/?pid=130349#p is often good enough if you only need to compare distances.

bonus tip: x^^(x>>31) is faster and nearly identical to abs(x), so you can gain some more speed:

```lua
function len2d_fast3(dx,dy)
  local s = sin(atan2(dx,dy))
  local r = s==0 and dx or dy/s
  return r^^(r>>31)
end
```
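Why `x^^(x>>31)` is only *nearly* `abs(x)`, sketched in Lua 5.3+ on raw 16.16 integers (value × 65536). PICO-8's `>>` is an arithmetic shift, so `x>>31` is 0 for non-negative x and all ones (raw -1, i.e. -0x0.0001) for negative x; XOR with all ones is a bitwise NOT, giving `-x - 0x0.0001`. Lua's `>>` is a logical shift, so this sketch builds the mask with floor division instead, and Lua spells XOR as `~` rather than `^^`. The function names are made up.

```lua
-- raw is a signed 32-bit 16.16 value stored in a Lua integer
function fake_abs_raw(raw)
  local mask = raw // 0x80000000   -- -1 if raw < 0, else 0 (like PICO-8's x>>31)
  return raw ~ mask                -- XOR: unchanged if mask is 0, bitwise NOT if -1
end

function fake_abs(v)
  return fake_abs_raw(math.floor(v * 65536)) / 65536
end

-- fake_abs(2.5)  == 2.5
-- fake_abs(-2.5) == 2.5 - 1/65536  (one 16.16 step short)
```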
